Memory in AI Agents

Imagine talking to a friend who forgets everything you’ve ever said. Every conversation starts from zero. No memory, no progress, no context. It would feel awkward, exhausting, and impersonal, right? Unfortunately, that is exactly how most AI systems behave by default. They’re smart, but they lack something crucial: memory.

Introduction: Why is AI Naturally "Forgetful"?

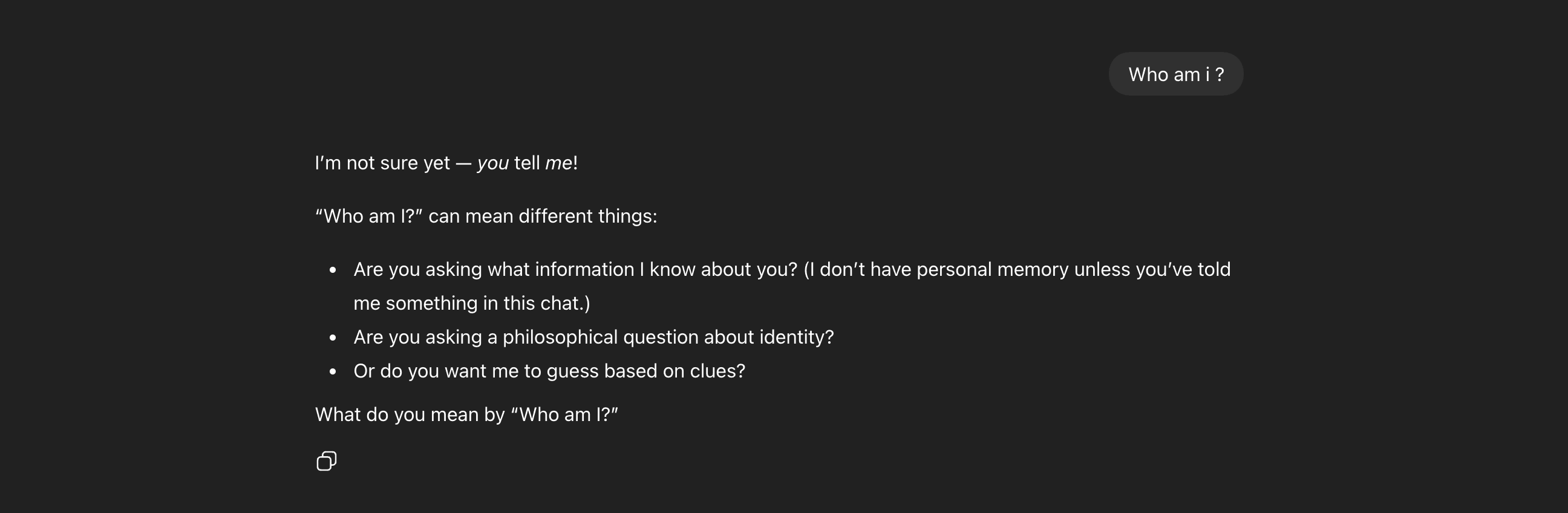

Consider this use case:

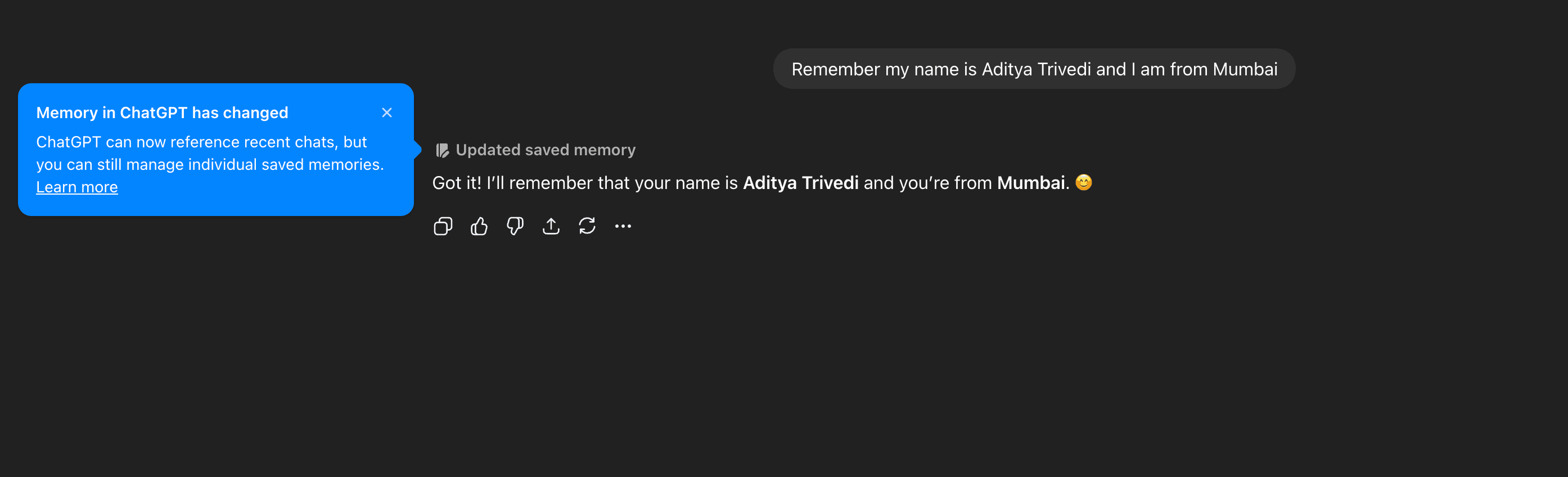

I created a brand-new ChatGPT account and asked it, “Who am I?” The answer I got was “I’m not sure yet - you tell me!” This means that, initially, it has no context or memory of who I am. Next, I told it to remember: “My name is Aditya Trivedi and I am from Mumbai.”

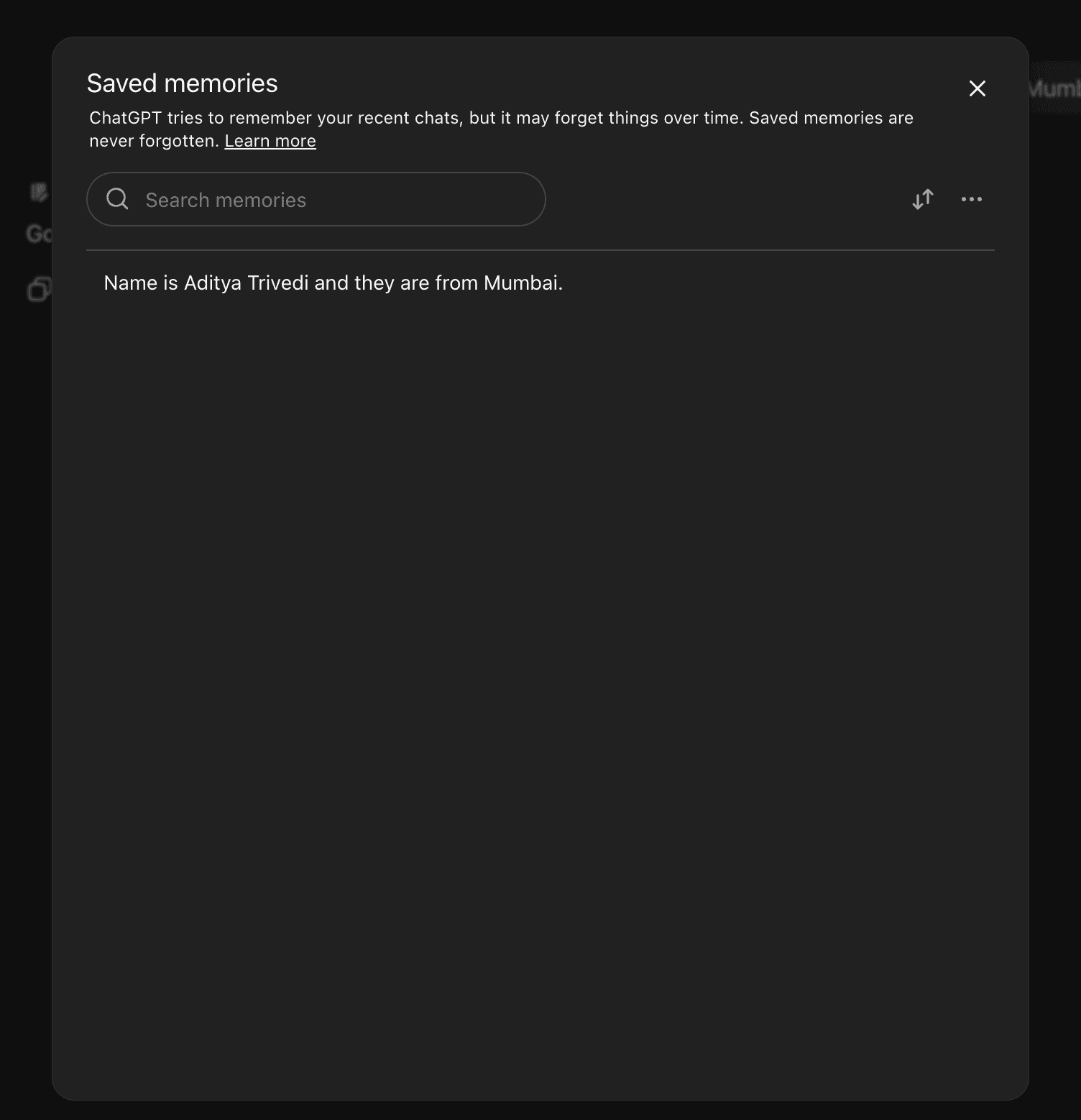

What do we see in the image above? ChatGPT indicates that its memory has been updated, meaning the AI now remembers who I am and where I live. It also tries to remember your recent chats, but it may forget them over time.

Now, when I ask the same question, ChatGPT can answer it. Why? Because it now remembers my name and where I live.

Tools like ChatGPT feel helpful until you find yourself repeating instructions or preferences again and again. To build agents that can learn, evolve, and collaborate, real memory isn’t just beneficial; it’s essential.

To understand memory, we first need to understand how tools like ChatGPT work under the hood.


The Stateless Problem:

By default, AI models are stateless: they have no built-in memory of past requests. If you say “Hello”, the AI replies; if you then say “My name is Aditya”, the AI processes only that specific message. The catch is that if you send a new message without the previous context, the AI treats it as a brand-new conversation. One common way to keep the chat going is for the developer to send the entire conversation history back to the AI with every single message you type.
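Here is a minimal Python sketch of that workaround. `stateless_model` is a hypothetical stand-in for a real LLM API (no actual provider call is shown): like a real model, it can only use the messages passed to it in a single call. `Chat` plays the developer's role, keeping the history and re-sending all of it on every turn.

```python
from typing import Dict, List

Message = Dict[str, str]  # e.g. {"role": "user", "content": "Hello"}


def stateless_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for an LLM call.

    It keeps no state between calls: it can only 'know' the user's name
    if that name appears in the messages passed in right now.
    """
    last = messages[-1]["content"]
    if last.startswith("My name is "):
        return "Nice to meet you!"
    # Answer "Who am I?" using only what is inside this one request.
    for msg in messages:
        if msg["role"] == "user" and msg["content"].startswith("My name is "):
            return f"You are {msg['content'][len('My name is '):]}."
    return "I'm not sure yet - you tell me!"


class Chat:
    """Developer-side wrapper that stores history and re-sends all of it."""

    def __init__(self) -> None:
        self.history: List[Message] = []

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # The whole conversation goes out with every request.
        reply = stateless_model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Turn 1 on its own: the reply is discarded and nothing is kept.
stateless_model([{"role": "user", "content": "My name is Aditya"}])
print(stateless_model([{"role": "user", "content": "Who am I?"}]))
# prints: I'm not sure yet - you tell me!

# With the history re-sent on every turn, the earlier message is still visible.
chat = Chat()
chat.send("My name is Aditya")
print(chat.send("Who am I?"))
# prints: You are Aditya.
```

The model itself never changes between the two experiments; only what the caller sends does. That is why the history keeps growing with every turn.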


Context Window Limit:

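Given the solution above, you may ask, “Why not just send the entire history forever?” Hehe, that’s not possible. AI models have a limit called the context window: the maximum amount of text, measured in tokens, that a model can take into account in a single request. In simple words, you can think of it as the AI’s short-term attention span. Once a conversation grows past that limit, older messages have to be trimmed or summarized, and whatever falls out of the window is forgotten.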
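A common way to stay within the window is to keep only the most recent messages that fit. The sketch below assumes a crude count of one token per word (`count_tokens` and `fit_to_window` are hypothetical helpers; real models use their own tokenizers) and a made-up budget of 8 tokens, so the oldest message, the one containing the user's name, is the first to be dropped.

```python
from typing import Dict, List

Message = Dict[str, str]


def count_tokens(text: str) -> int:
    """Rough assumption: one token per whitespace-separated word.
    Real models use their own tokenizers, so actual counts differ."""
    return len(text.split())


def fit_to_window(history: List[Message], max_tokens: int) -> List[Message]:
    """Keep the most recent messages whose total size fits max_tokens.

    Anything older is dropped; this is exactly how a plain chat 'forgets'.
    """
    kept: List[Message] = []
    used = 0
    for msg in reversed(history):  # walk from newest to oldest
        cost = count_tokens(msg["content"])
        if used + cost > max_tokens:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    {"role": "user", "content": "My name is Aditya and I am from Mumbai"},
    {"role": "assistant", "content": "Nice to meet you"},
    {"role": "user", "content": "Who am I?"},
]
window = fit_to_window(history, max_tokens=8)
print([m["content"] for m in window])
# prints: ['Nice to meet you', 'Who am I?']
```

The model would now receive "Who am I?" without the message that answers it, which is the gap a dedicated memory layer is meant to fill.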

What do we mean by Memory in AI Agents?

Memory is the ability to retain and recall relevant information from the user’s inputs across time, tasks, and multiple interactions. It allows agents to remember what happened in the past and use that information to improve their behaviour in the future.

It is not just about storing chat history; it is about giving the LLM the relevant context and building a persistent state that evolves over time.

Think of it like human memory. If you meet a friend after, say, 15 years, you obviously won’t remember everything. You remember the important stuff: their name, where they live, what food they like, and so on. A memory layer does the same thing for AI.
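To make the "facts, not transcripts" idea concrete, here is a minimal sketch of a memory layer, assuming facts are simple key/value pairs per user and that a local JSON file is an acceptable store. `FactMemory`, `remember`, and `as_context` are hypothetical names, not a particular library's API. A second instance reading the same file stands in for a later session.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict


class FactMemory:
    """Hypothetical minimal memory layer: key facts per user, saved to disk.

    It stores distilled facts (name, city, food preferences), not the raw
    chat log, and newer facts overwrite older ones so the state evolves.
    """

    def __init__(self, path: Path) -> None:
        self.path = path
        # Reload whatever earlier sessions saved, so memory survives restarts.
        self.facts: Dict[str, Dict[str, str]] = (
            json.loads(path.read_text()) if path.exists() else {}
        )

    def remember(self, user_id: str, key: str, value: str) -> None:
        self.facts.setdefault(user_id, {})[key] = value  # overwrite = evolve
        self.path.write_text(json.dumps(self.facts))

    def as_context(self, user_id: str) -> str:
        """Render a user's facts as a short block to prepend to the prompt."""
        facts = self.facts.get(user_id, {})
        return "\n".join(f"- {k}: {v}" for k, v in sorted(facts.items()))


store = Path(tempfile.mkdtemp()) / "memory.json"
session1 = FactMemory(store)
session1.remember("aditya", "name", "Aditya Trivedi")
session1.remember("aditya", "city", "Mumbai")

session2 = FactMemory(store)  # a later session reloads the saved facts
print(session2.as_context("aditya"))
# prints:
# - city: Mumbai
# - name: Aditya Trivedi
```

Only a few short lines reach the prompt, no matter how long the original conversations were, which keeps memory compatible with the context window limit.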

The 4 Types of AI Memory:

Short-Term Memory:

This is temporary memory. It exists only for the current conversation or task and is discarded once the task is finished. For example, say you go to a restaurant, order a coffee and a burger, and get order number 132. Ten minutes later, the waiter calls out order number 132. Since you memorized it, you collect your food. Once you finish eating and leave, you forget the number; you don’t remember it for the rest of your life.
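In code, short-term memory can be sketched as a scratchpad scoped to one task. This assumes a hypothetical `short_term_memory` helper built on Python's `contextlib.contextmanager`; the scratchpad is emptied when the `with` block (the "task") ends, just like forgetting the order number after the meal.

```python
from contextlib import contextmanager
from typing import Dict, Iterator


@contextmanager
def short_term_memory() -> Iterator[Dict[str, str]]:
    """Hypothetical scratchpad that exists only for one conversation or task.

    Whatever is stored here is cleared as soon as the task ends.
    """
    scratch: Dict[str, str] = {}
    try:
        yield scratch
    finally:
        scratch.clear()  # task finished: forget everything


with short_term_memory() as memory:
    memory["order_number"] = "132"
    # ...ten minutes later, the waiter calls out 132...
    print(memory.get("order_number") == "132")  # prints True: we still know it

print(memory)  # prints {}: the order number is gone once the task is over
```

Clearing in `finally` means the scratchpad is wiped even if the task fails partway through.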

Long-Term Memory:

This memory persists indefinitely. It stays across different chats, days, and months, and even survives a browser refresh 😅. It is further divided into 3 subtypes:

- Factual Memory: Retains user preferences, communication style, domain context, etc. For example: “You prefer TypeScript output and detailed answers.”
- Episodic Memory: Remembers specific past interactions or outcomes. For example: “Last time I used this script, it destroyed my performance.”
- Semantic Memory: Stores generalized, abstract knowledge acquired over time. For example: “Tasks involving JSON parsing usually stress you out. Want a quick template?”
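The three long-term subtypes can be sketched as separate stores. This is a hypothetical structure, not a standard API: `factual` holds preferences as key/value pairs, `episodic` holds individual past interactions, and `semantic` holds generalizations distilled from repeated episodes. The rule that two unsuccessful episodes on the same topic produce a lesson is an arbitrary assumption chosen for illustration, and the episode summaries are invented examples.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Episode:
    """One specific past interaction and how it turned out."""
    topic: str
    summary: str
    went_well: bool


@dataclass
class LongTermMemory:
    """Hypothetical long-term store split into the three subtypes."""
    factual: Dict[str, str] = field(default_factory=dict)  # preferences, style
    episodic: List[Episode] = field(default_factory=list)  # specific events
    semantic: List[str] = field(default_factory=list)  # generalizations

    def record(self, episode: Episode, threshold: int = 2) -> None:
        """Store an episode, then generalize if a topic keeps going badly.

        The threshold of 2 failures is an arbitrary, illustrative choice.
        """
        self.episodic.append(episode)
        failures = Counter(e.topic for e in self.episodic if not e.went_well)
        lesson = f"Tasks involving {episode.topic} usually stress the user out."
        if failures[episode.topic] >= threshold and lesson not in self.semantic:
            self.semantic.append(lesson)


memory = LongTermMemory()
memory.factual["output_language"] = "TypeScript"
memory.factual["answer_style"] = "detailed"
# Invented example episodes.
memory.record(Episode("JSON parsing", "Parser crashed on nested arrays", False))
memory.record(Episode("JSON parsing", "Schema mismatch took an hour to debug", False))
print(memory.semantic)
# prints: ['Tasks involving JSON parsing usually stress the user out.']
```

Episodic entries are raw events; the semantic entry only appears once a pattern repeats, which is what "acquired over time" means here.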

RAG ≠ Memory

While both RAG (Retrieval-Augmented Generation) and memory systems retrieve information to support LLMs, they solve very different problems.

RAG brings external knowledge into the prompt at inference time. It is fundamentally stateless, meaning it has no awareness of previous interactions, user identity, or how the current query relates to past conversations.

Memory, by contrast, brings continuity. It captures user preferences, past queries, decisions, and failures, and makes them available in future interactions.
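The difference shows up clearly in code. In this minimal sketch, `rag_retrieve` ranks a hypothetical two-document knowledge base by simple word overlap (a stand-in for the embedding search real RAG systems typically use) and knows nothing about who is asking, while the hypothetical `UserMemory` class keys stored notes by `user_id`. `build_prompt` combines the two.

```python
from typing import Dict, List, Set

DOCS = [  # hypothetical shared knowledge base, identical for every user
    "TypeScript adds static types to JavaScript.",
    "JSON is a text format for structured data.",
]


def words(text: str) -> Set[str]:
    return {w.strip(".,?!").lower() for w in text.split()}


def rag_retrieve(query: str) -> str:
    """Stateless: the same query returns the same document for every user."""
    return max(DOCS, key=lambda d: len(words(d) & words(query)))


class UserMemory:
    """Stateful: remembers what each user shared in earlier interactions."""

    def __init__(self) -> None:
        self.by_user: Dict[str, List[str]] = {}

    def add(self, user_id: str, note: str) -> None:
        self.by_user.setdefault(user_id, []).append(note)

    def recall(self, user_id: str) -> List[str]:
        return self.by_user.get(user_id, [])


def build_prompt(user_id: str, query: str, memory: UserMemory) -> str:
    """RAG supplies knowledge; memory supplies continuity."""
    notes = "; ".join(memory.recall(user_id)) or "none"
    return (
        f"Knowledge: {rag_retrieve(query)}\n"
        f"About this user: {notes}\n"
        f"Question: {query}"
    )


memory = UserMemory()
memory.add("aditya", "prefers TypeScript output")
print(build_prompt("aditya", "How do I parse JSON?", memory))
print(build_prompt("guest", "How do I parse JSON?", memory))
```

Both users get the same retrieved document, but only the returning user's prompt carries anything personal; that second line is the part RAG alone cannot provide.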

In a world where every agent has access to the same models and tools, memory will be the differentiator. The winner won’t be the agent that merely responds, but the one that remembers, learns, and grows with you.